Vassilis Routsis introduces an exciting Future Data Services project harnessing AI to improve access to census flow data.

CORDIAL-AI is a UKRI-funded pilot project under the ESRC Future Data Services programme, aiming to make complex datasets more accessible to researchers and policymakers.

It explores how advancements in Large Language Models (LLMs) can enhance data services and applies this cutting-edge technology to some of the UK Data Service’s most intricate datasets.

What is census flow data?

The pilot focuses on census flow data, which records the movement of people between two locations. These datasets are important for planning infrastructure, such as schools, hospitals, housing, and transport networks, as well as for helping businesses make strategic decisions, such as choosing locations for new stores.

Despite their significant utility, census flow datasets are less well known than standard aggregate data. They are more challenging for non-specialists due to their detailed geographical breakdowns, complex data relationships, and variables that often combine multiple topics within a single table.

The UK Data Service is the trusted repository for census data from the UK’s statistical agencies: National Records of Scotland (NRS), the Northern Ireland Statistics and Research Agency (NISRA), and the Office for National Statistics (ONS). It is the only online provider of access to both safeguarded and historic census flow data, currently covering censuses from 1981 to 2021.

The idea for CORDIAL-AI grew out of my work at the UK Data Service, where I support researchers and policymakers in accessing and analysing census data. It quickly became apparent that limited domain knowledge and technical expertise often stand in the way of the broader use of these valuable datasets.

Moving to a new API-driven platform

For almost a quarter of a century, we have relied on our very successful tool, WICID, to disseminate census flow data. Initially released in the early 2000s and continuously updated since, WICID has served users well, but it is increasingly difficult to maintain and scale.

To meet current and future demands, a completely new API-driven platform is being developed from scratch, as no existing solution or CMS could meet the complexity and sub-setting requirements of flow data.

This new system is designed to modernise how census flow data is discovered and retrieved, using the latest technologies and best practices. We also hope it will improve interoperability between the different UK Data Service systems.

The API is already being used for internal purposes, and the whole platform, complete with a user interface, is on track for public launch by the end of 2025. With a modular design and seamless integration with AI technologies, it promises a significant step forward. We will be sharing more about this in future blog posts.

How CORDIAL-AI works

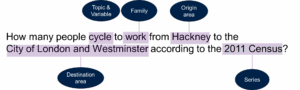

CORDIAL-AI builds on this new platform by introducing a natural language query system powered by Large Language Models (LLMs). This allows users to ask intuitive, plain-English questions and receive clear, structured responses, making census flow data far more accessible.

For example, a researcher might ask, ‘Show me migration flows from Manchester to London among people aged 20–35 according to the 2021 census’, and receive verifiable, ready-to-use data without having to navigate through various data selection screens. This can also help more experienced researchers save time.

Behind the scenes, we have developed a set of AI agents that work together, each reflecting different layers of how census flow data is structured. At the top level are broad dataset groupings, such as the 2001, 2011, and 2021 censuses, or others like the school census. From there, we move into data families, like migration, commuting, or second-address tables, and finally into more detailed layers involving themes, topics, variables, geographies and areas.

These agents use LLMs to interpret user questions more effectively than earlier approaches based on heuristics or on conventional machine-learning techniques for named entity recognition. When there is uncertainty, the agents make the most likely assumption and inform the user; if the query is too ambiguous, they request clarification.
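The assume-or-ask behaviour described above can be sketched in a few lines. This is an illustration only, not the project's implementation: the layer names, the confidence scores and both thresholds are assumptions introduced here to make the idea concrete.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class LayerResult:
    layer: str             # hypothetical layer name, e.g. "data_family"
    value: Optional[str]   # what the agent resolved, or None if nothing matched
    confidence: float      # assumed 0.0-1.0 score reported by the agent

@dataclass
class Resolution:
    selections: dict = field(default_factory=dict)
    assumptions: list = field(default_factory=list)
    clarification: Optional[str] = None

def resolve(results, assume_above=0.6, accept_above=0.9):
    """Accept confident answers, flag likely assumptions, ask when too unsure."""
    out = Resolution()
    for r in results:
        label = r.layer.replace("_", " ")
        if r.value is None or r.confidence < assume_above:
            # Too ambiguous: stop and ask the user instead of guessing.
            out.clarification = f"Could you specify the {label}?"
            return out
        out.selections[r.layer] = r.value
        if r.confidence < accept_above:
            # Plausible but uncertain: proceed, and tell the user what was assumed.
            out.assumptions.append(f"Assumed {label} = {r.value}")
    return out

res = resolve([
    LayerResult("dataset_group", "2021 census", 0.95),
    LayerResult("data_family", "migration", 0.70),
])
```

Here the dataset group is accepted silently, while the data family is used but reported back to the user as an assumption.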

Integrating the natural language query with the API

The agents do not process the raw census data directly; our underlying database exceeds 300 GB. Instead, their job is to translate natural language questions into precise API queries. The API then handles the heavy lifting, performing complex data transformations and returning the correct results.
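To make the translation step concrete, here is a minimal sketch of turning a structured interpretation of the earlier Manchester-to-London question into a query string. The endpoint `https://api.example.org/flows` and every parameter name are hypothetical placeholders; the real API is not described in this post.

```python
from dataclasses import dataclass
from urllib.parse import urlencode

@dataclass(frozen=True)
class FlowQuery:
    """Structured form of a user question (all field names are illustrative)."""
    census_year: int
    family: str
    origin: str
    destination: str
    age_min: int
    age_max: int

def to_url(q, base="https://api.example.org/flows"):
    """Serialise the structured query as URL parameters for a hypothetical API."""
    params = {
        "year": q.census_year,
        "family": q.family,
        "origin": q.origin,
        "destination": q.destination,
        "age": f"{q.age_min}-{q.age_max}",
    }
    return f"{base}?{urlencode(params)}"

url = to_url(FlowQuery(2021, "migration", "Manchester", "London", 20, 35))
```

The point of the design is that the language model only has to produce the small structured object; the data retrieval itself stays inside the API.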

To support this, we have integrated technologies like Elasticsearch into the API, which the LLM agents use to manage and search our vast geographical metadata. For example, a single geography can include up to 250,000 areas (Output Areas), and our database holds about 160 distinct geographies across various censuses.
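As a rough idea of what such a metadata lookup could look like, the sketch below builds an Elasticsearch query body that fuzzily matches an area name within a single geography. The field names `area_name` and `geography_code` and the value `LAD_2021` are assumptions for illustration; only the query structure (`bool`, `match`, `term`, `fuzziness`) comes from the Elasticsearch query DSL.

```python
def build_area_search(name, geography_code, size=5):
    """Build a query body: fuzzy match on area name, restricted to one geography."""
    return {
        "size": size,  # return only the top few candidate areas
        "query": {
            "bool": {
                # Tolerate small spelling differences in the place name.
                "must": [
                    {"match": {"area_name": {"query": name, "fuzziness": "AUTO"}}}
                ],
                # Exact filter, so names repeated across geographies do not clash.
                "filter": [{"term": {"geography_code": geography_code}}],
            }
        },
    }

body = build_area_search("Manchester", "LAD_2021")
```

Filtering by geography first matters when the same place name appears at several geographic levels.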

The LLMs, supported by custom functions, help identify what the user is asking for, whether it is specific locations, all areas, nested areas, or aggregations of geographies, and translate that into an API query the system can execute efficiently.

Traditional Retrieval-Augmented Generation (RAG) approaches, which rely on retrieving and generating responses from chunks of text, proved poorly suited to this kind of data. The presence of large, complex, and overlapping code lists makes it challenging to represent or retrieve the relevant context in a way that is both accurate and performant, particularly when a precise data subset is required.

The process – with a human element

Our current focus is mainly on the 2011 and 2021 census data, as these are in highest demand. To improve how our agents handle user queries, we have developed pipelines to create robust training datasets comprising both real human queries and synthetic examples. Work is also underway to improve the quality of the metadata itself.

The process starts by drafting questions that a typical user might ask and pairing them with ideal API responses. We then use AI to break these into tailored training datasets for each agent involved in the system. To broaden and enrich the training material, we further augment these datasets using generative AI, which helps the models generalise better across different types of queries.
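The idea of splitting one question-and-answer pair into per-agent training examples can be sketched as follows. Note that this is a deliberately simplified, deterministic stand-in: the post describes using AI for this step, and the agent names and query fields below are hypothetical.

```python
import json

def split_by_agent(question, ideal_query):
    """Create one training example per agent from a single annotated question.

    `ideal_query` maps a (hypothetical) agent name to the slice of the ideal
    API query that this agent is responsible for producing.
    """
    return [
        {
            "agent": agent,
            "prompt": question,
            "completion": json.dumps(target, sort_keys=True),
        }
        for agent, target in ideal_query.items()
    ]

examples = split_by_agent(
    "How many people commuted from Leeds to York in 2011?",
    {
        "dataset": {"census_year": 2011},
        "family": {"family": "commuting"},
        "areas": {"origin": "Leeds", "destination": "York"},
    },
)
```

Each agent then sees the full question but is trained only on the part of the answer it owns.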

The human element is critical, as users come with varying levels of experience, ranging from complete newcomers to seasoned data specialists.

To reflect the diversity of user experience, two Master’s students from UCL’s Digital Humanities programme joined us for a placement in May 2025. They contributed valuable real-world queries that helped expand and enrich our training data.

At first, their questions reflected the perspective of inexperienced users. As they became more familiar with the datasets, they began formulating queries that closely resembled those of more advanced census researchers.

Until recently, we relied solely on OpenAI models like GPT-4o and 4o-mini. We are now in the process of fine-tuning our own locally hosted models, including Llama 3.1 8B and Qwen 2.5 7B.

Early evaluation benchmarks suggest that, once fine-tuned, these local models outperform the base version of GPT-4o. GPT-4o still has the edge in handling unfamiliar query structures and maintaining conversational fluency, especially when paired with reasoning models like o3.

Further plans

We plan to continue refining smaller local models, while also exploring fine-tuning options with cloud-based models like GPT-4o. We aim to offer both local and cloud options; however, local open-source models are especially important for use cases involving potentially sensitive data, where in-house hosting is necessary.

Models in the 7–13 billion parameter range strike a good balance: they are powerful enough for our needs but can still be run on mid-range gaming GPUs and hosted cost-effectively on local or secure cloud-based infrastructure.
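A back-of-the-envelope calculation shows why this size range is practical. The sketch below estimates the memory needed for model weights alone as parameters multiplied by bytes per weight. The precisions shown (16-bit and 4-bit) are assumptions for illustration, since the post does not say how the models are stored, and the estimate ignores activations and the attention cache, which add to the total.

```python
def weight_memory_gb(params_billion, bits_per_weight):
    """Approximate memory for the weights only, in decimal gigabytes.

    params_billion * 1e9 weights * (bits / 8) bytes each, divided by 1e9.
    """
    return params_billion * bits_per_weight / 8

for size in (7, 8, 13):
    print(f"{size}B: {weight_memory_gb(size, 16)} GB at 16-bit, "
          f"{weight_memory_gb(size, 4)} GB at 4-bit")
```

At 16-bit precision an 8 billion parameter model needs about 16 GB for its weights, while a 4-bit quantised copy needs about 4 GB, which is within reach of consumer graphics cards.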

We are also exploring ways to make both the interface and LLM outputs more explainable and transparent. This includes providing helpful context and chain-of-thought reasoning, so users understand how the system arrived at its results.

Our strongest safeguard against the black-box character of LLMs, hallucinations or other types of inaccuracies caused by them is the API itself, which ensures that all returned information can be independently verified.
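One way to picture this safeguard is a check that runs before any query is executed: every code the model proposes must exist in the service's own metadata, so an invented value is caught rather than silently answered. The sketch below is an assumption about how such a check could look, with hypothetical field names and code lists.

```python
def unknown_codes(query, valid):
    """Return {field: [values]} for any proposed value missing from the metadata."""
    problems = {}
    for field_name, values in query.items():
        allowed = valid.get(field_name, set())
        bad = [v for v in values if v not in allowed]
        if bad:
            problems[field_name] = bad
    return problems

# Hypothetical metadata and a model-proposed query containing one invented area.
valid = {"family": {"migration", "commuting"}, "area": {"Manchester", "London"}}
proposed = {"family": ["migration"], "area": ["Manchester", "Londinium"]}

problems = unknown_codes(proposed, valid)
```

An empty result means every part of the query can be traced back to real metadata; anything else is rejected or sent back for clarification.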

Looking further ahead and responsible innovation

We have plenty of ideas for expanding this work, but as a one-year pilot, CORDIAL-AI is naturally time-bound, with funding ending in August 2025. We are very pleased with the progress made in such a short span, though we recognise that both the interface and underlying models have limitations we would like to work on further.

Looking ahead, we are keen to enhance conversational capabilities, improve interpretability through explainable AI, and develop more flexible orchestration across our agentic systems. This is a very fast-moving field that demands ongoing development to keep pace.

We are also considering how to improve transparency around the sustainability aspects of such technologies.

The experience so far has been immensely valuable, not only in building technical know-how but also in allowing us to run benchmarks, test experimental approaches like model distillation, and explore new ways to improve human-computer interaction for navigating complex datasets in social science research.

We aim to give greater visibility to these datasets and help democratise access. While there are valid concerns surrounding this kind of technology, including ethical, economic, societal, and environmental considerations, it also holds considerable potential.

It is important to engage with it thoughtfully, finding practical and meaningful ways to utilise it in research and beyond, in ways that genuinely serve the public good. As future data services take shape, it is not just about keeping up with the technology; it is about shaping it to reflect the values we care about.

About the author

Vassilis Routsis is a Senior Research Fellow and Lecturer in the Department of Information Studies at UCL. At the UK Data Service, he leads work on census flow data, providing user support and training, and driving research and development on modern tools and infrastructure. He is the Project Lead for the CORDIAL-AI pilot.
